5 AI Signals Strategic Leaders Can't Ignore

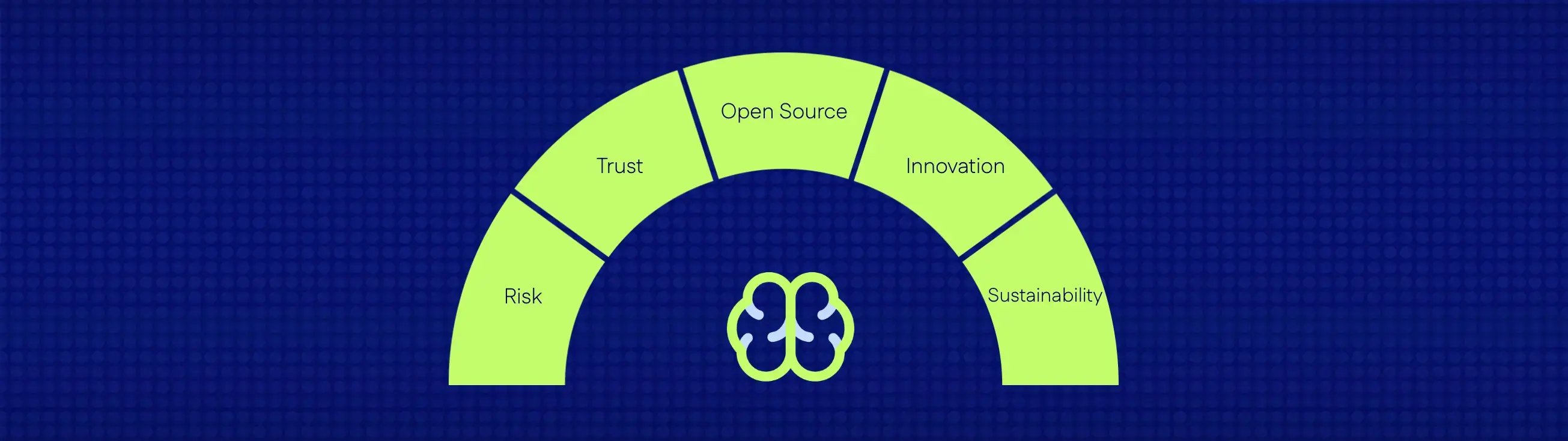

AI is one of the defining technologies of our time, but strategic clarity is still scarce. Most executives understand that AI will shape their future. Yet many are unsure where to start, what to prioritize, or how to cut through the technical jargon and hype.

The noise is overwhelming, the stakes are high, and the window for action is narrowing. This article focuses on helping you build a future-ready AI strategy, one grounded in insight, not buzzwords.

To kick things off, here are five of the most strategic takeaways from Stanford's latest AI Index, and why they deserve a place on your leadership radar.

1. AI costs have collapsed. Now the challenge is strategic, not technical

In just 18 months, the cost of running a GPT-3.5-level AI model has dropped from $20 to $0.07 per million tokens, a decrease of more than 280-fold.
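To make that arithmetic concrete, here is a minimal Python sketch using the two prices quoted above. The monthly workload of 500 million tokens is a hypothetical figure chosen only for illustration; it does not come from the AI Index.

```python
# Prices quoted above, in USD per million tokens for a GPT-3.5-level model.
OLD_PRICE_PER_M_TOKENS = 20.00
NEW_PRICE_PER_M_TOKENS = 0.07

# Hypothetical workload (an assumption for illustration, not from the report).
HYPOTHETICAL_TOKENS_PER_MONTH = 500_000_000


def fold_decrease(old_price: float, new_price: float) -> float:
    """Return how many times cheaper the new price is than the old one."""
    return old_price / new_price


def monthly_cost(tokens_per_month: int, price_per_m_tokens: float) -> float:
    """Return the USD cost of processing a monthly token volume at a given price."""
    return tokens_per_month / 1_000_000 * price_per_m_tokens


if __name__ == "__main__":
    fold = fold_decrease(OLD_PRICE_PER_M_TOKENS, NEW_PRICE_PER_M_TOKENS)
    before = monthly_cost(HYPOTHETICAL_TOKENS_PER_MONTH, OLD_PRICE_PER_M_TOKENS)
    after = monthly_cost(HYPOTHETICAL_TOKENS_PER_MONTH, NEW_PRICE_PER_M_TOKENS)
    print(f"Price decrease: about {fold:.0f}-fold")
    print(f"Monthly cost before: ${before:,.2f}")
    print(f"Monthly cost after:  ${after:,.2f}")
```

Under these assumptions, the same workload that would have cost about $10,000 a month now costs about $35. Your own volumes and vendor pricing will differ, so treat this as a back-of-the-envelope check rather than a budget.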

This dramatic drop has removed a major barrier to entry: powerful AI is no longer reserved for Big Tech. Today, almost any organization can access capable AI models at a fraction of the previous cost.

That means the real barrier is no longer technical. It’s strategic. Many companies still lack clarity on where AI can create real value, and alignment across teams on how to test and scale it. Without both, even the best technology delivers little impact.

Leaders need to move past abstract ambition and ask concrete questions:

- Which decisions or workflows could AI improve today?

- Where do we face scale, complexity, or repetitive tasks?

- Who needs to be involved in testing and rollout?

Begin with targeted experiments in areas like customer service, planning, or internal knowledge management. You don’t need a lengthy 12-month roadmap; you simply need to take your first confident step. Our AI for Innovation service helps teams identify high-impact AI use cases and move from pilot to scale.

2. Trust is declining, yet use is increasing

AI adoption is accelerating. In 2024, 78% of companies reported using AI, up from 55% the year before.

However, public trust in AI providers has decreased, and incidents related to AI have risen by 56%.

This trust gap has important strategic implications. Responsible AI (RAI) isn’t just about avoiding regulatory trouble; it’s about protecting trust with customers, employees, and stakeholders. When AI makes decisions that affect people’s lives or work, confidence in those systems becomes essential.

Despite this, many organizations continue to view RAI as a technical issue or a compliance checklist. It is much more than that. It’s a leadership concern.

Executives need to ensure that governance, ethics, and transparency are baked into AI initiatives from day one, not added as an afterthought. That includes knowing what data models are trained on, who audits their outputs, and how decisions are explained.

The goal isn’t perfection. It’s clarity, accountability, and alignment with your values.

3. Open AI models are catching up, and that changes the game

Until recently, the most powerful AI models came from a small group of companies with tightly guarded technology. If you didn’t have access to their proprietary systems, you were stuck waiting.

That’s no longer the case. In 2024, the performance gap between open models (available for anyone to use and build on) and closed models (owned and controlled by a few major players) shrank dramatically from 8% to just 1.7%.

This development opens up new possibilities: organizations do not need exclusive partnerships to start their AI initiatives. Open-source ecosystems like LLaMA, Mistral, and Phi-3 are putting high-performing capabilities within reach of in-house teams and mid-sized companies.

But this broader access also comes with new responsibility.

The question is no longer "Can we get access?" but "Are we ready to use it well?"

What to do?

- Create safe internal sandboxes for low-risk experimentation.

- Invest in talent that understands both the technical and ethical implications.

- Start embedding open models in non-sensitive workflows to build familiarity and confidence.

Access is not the differentiator. Execution is. A thoughtful corporate upskilling approach can close talent gaps while building internal capability to deploy AI responsibly.

4. AI is creating real impact, but it's not transformative by default

AI isn’t just a fringe experiment anymore. It’s being used across industries such as marketing, customer service, supply chains, and software development.

But here’s the nuance: While adoption is accelerating, returns remain modest for most. Companies report cost savings of less than 10% and revenue gains below 5%.

That doesn’t mean AI isn’t powerful. It means its impact is highly dependent on context, design, and intent.

Take two real examples:

- CrowdStrike has replaced parts of its technical support staff with AI, citing major efficiency gains.

- Meanwhile, Klarna reversed course, rehiring customer service staff it had previously laid off in favor of AI, after realizing the tech didn’t meet expectations.

AI isn’t a magic bullet. It’s a capability. And like any capability, its value depends on how well it’s integrated, where it’s applied, and whether it augments or replaces human strengths.

The key isn’t to chase what others are doing. Strategic foresight and dynamic organizational design can help avoid tech-first initiatives that fail to deliver.

5. The environmental cost of AI is now a boardroom issue

AI’s progress comes with a hidden cost: carbon.

Training GPT-4 produced over 5,000 tons of CO₂, while Meta’s LLaMA 3.1 generated nearly 9,000 tons. That’s a significant environmental impact, especially when measured against the average carbon footprint in many parts of the world.

Importantly, these emissions are not distributed evenly. The carbon cost of AI varies widely depending on where and how models are trained: local energy sources, infrastructure, and compute intensity all play a role. As AI adoption scales globally, so does its environmental responsibility.
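A rough way to see why location matters is a common simplified estimate: energy used by the hardware, multiplied by the data center's overhead factor (PUE), multiplied by the carbon intensity of the local grid. The Python sketch below applies that approximation. Every input value is hypothetical and chosen only for illustration, and this is not the methodology used in the AI Index.

```python
def training_emissions_tonnes(
    it_energy_mwh: float,
    pue: float,
    grid_kg_co2_per_kwh: float,
) -> float:
    """Estimate training emissions in tonnes of CO2.

    Simplified approximation:
    hardware energy x data-center overhead (PUE) x grid carbon intensity.
    """
    facility_kwh = it_energy_mwh * 1_000 * pue       # MWh -> kWh, plus overhead
    kg_co2 = facility_kwh * grid_kg_co2_per_kwh      # kWh -> kg of CO2
    return kg_co2 / 1_000                            # kg -> tonnes


# Hypothetical inputs (assumptions for illustration only).
HYPOTHETICAL_ENERGY_MWH = 10_000     # energy drawn by the training hardware
HYPOTHETICAL_PUE = 1.2               # data-center overhead factor
LOW_CARBON_GRID = 0.05               # kg CO2 per kWh, mostly renewable grid
HIGH_CARBON_GRID = 0.70              # kg CO2 per kWh, fossil-heavy grid

if __name__ == "__main__":
    for label, intensity in [("low-carbon grid", LOW_CARBON_GRID),
                             ("high-carbon grid", HIGH_CARBON_GRID)]:
        tonnes = training_emissions_tonnes(
            HYPOTHETICAL_ENERGY_MWH, HYPOTHETICAL_PUE, intensity
        )
        print(f"{label}: about {tonnes:,.0f} tonnes of CO2")
```

With these made-up inputs, the identical training run produces roughly 600 tonnes on the low-carbon grid and roughly 8,400 tonnes on the high-carbon one. The exact numbers are not the point; the spread is, and it is why where a model is trained deserves a place in vendor conversations.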

The question has shifted from whether AI contributes to emissions to how your organization plans to manage, reduce, and report them responsibly.

That means:

- Prioritizing efficiency when selecting models and infrastructure

- Asking vendors tough questions about energy use and emissions

- Integrating sustainability into your AI roadmap, not just your ESG report

From awareness to action

AI is advancing rapidly, but there is still time to lead with clarity.

The most impactful organizations won’t be the ones with the flashiest tools or the biggest budgets. They’ll be the ones that ask better questions, run smarter experiments, and integrate AI into their strategy, culture, and operations with intention.

We believe every corporation can design an AI strategy that’s not only bold and scalable but responsible and human-centered. That’s why we combine strategic foresight, business building, and capability development into every AI initiative.

If you would like to discuss how these changes can be integrated into your strategy, teams, and operating model, let’s talk.

This article is based on the 2025 AI Index Report by Stanford University’s Institute for Human-Centered AI (HAI). Licensed under CC BY-ND 4.0. For more comprehensive insights, refer to the full Stanford HAI AI Index Report 2025.
